Exporting for Inochi2D

Inochi2D is a free and open source 2D animation framework similar to Live2D, currently in beta. It can already be used for VTuber activities through its official streaming app, and the Inochi2D and Ren'Py developers have started discussing an implementation of the framework in Ren'Py. You can learn more about the project here, follow their Twitter account here, or join their Discord community here.

Please note that Mannequin Character Generator is not affiliated with Inochi2D. If you want to support Inochi2D for the betterment of the game development and VTuber industries as a whole, we strongly recommend donating via their Patreon or GitHub Sponsors, or contributing code and translations to the GitHub repository.

To edit Inochi2D animations, use their official animation editor app (Inochi Creator) which can be downloaded from itch.io or GitHub.

For VTuber livestreaming, use their official livestreaming app (Inochi Session) which can be downloaded from itch.io or GitHub. We strongly recommend using the nightly builds of Inochi Session if you plan to use characters exported by Mannequin Character Generator. These nightly builds of Inochi Session can be downloaded from here.

Both Inochi2D and the Inochi2D export feature in Mannequin Character Generator are still in beta. Back up your character often (especially your exported .inx file) and expect a lot of changes to happen quickly as both Inochi2D and Mannequin Character Generator develop further!
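If you want to automate those backups, here is a minimal Python sketch that copies an exported .inx file into a backup folder with a timestamp in its name. The function name `backup_inx` and the folder name `inx_backups` are our own placeholders, not part of Mannequin Character Generator or Inochi2D.

```python
import shutil
import time
from pathlib import Path


def backup_inx(inx_path, backup_dir="inx_backups"):
    """Copy an exported .inx file into backup_dir with a timestamp in its name."""
    src = Path(inx_path)
    dest_dir = Path(backup_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)  # create the folder if needed
    stamp = time.strftime("%Y%m%d-%H%M%S")       # e.g. 20240131-142500
    dest = dest_dir / f"{src.stem}-{stamp}{src.suffix}"
    shutil.copy2(src, dest)  # copy2 also keeps the file's modification time
    return dest
```

Calling `backup_inx("my_character.inx")` (a hypothetical file name) after each export keeps every version side by side, so you can go back to an older one if a later change breaks something.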

Video Tutorials

We have made some video tutorials explaining this export feature: Free 2D VTuber Livestreaming with Mannequin and Puppetstring!

Choosing Templates for Inochi2D Export

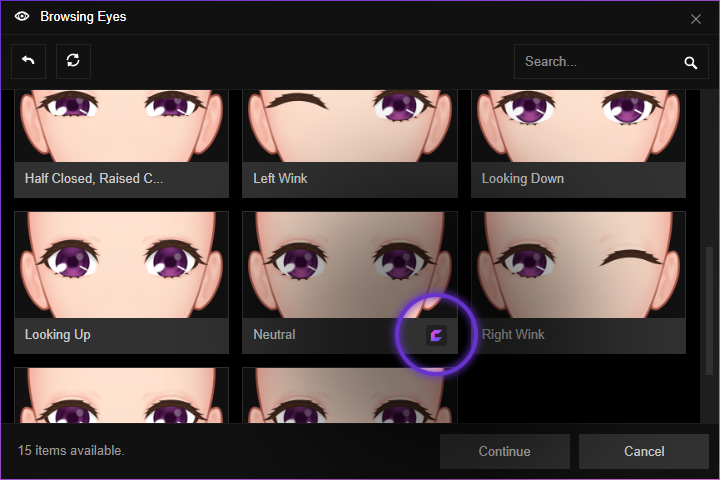

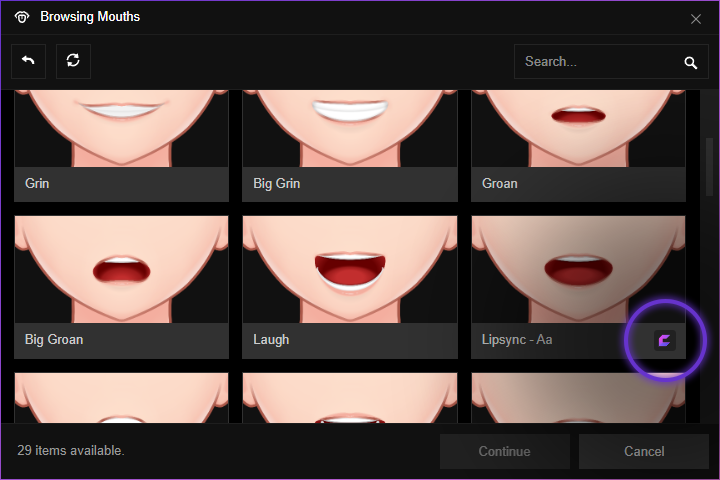

To export pre-rigged characters with head turn, eye gaze, blink, and lip sync already set up, choose pose, clothing, and body part templates that are pre-rigged. Templates that are pre-rigged for Inochi2D are marked with the Inochi Creator icon.

You need to use these marked templates for the following aspects of your character in order to generate a fully rigged output:

- Pose

- Head

- Ear

- Hair (every hair part, from Primary Hairstyle and Bangs to Additional Hair Parts)

- Nose

- Brow

- Eye

- Mouth

- Face Colors

- Clothing

After making sure you have chosen proper templates for the aspects mentioned above, simply choose the Inochi Creator (.inx) format option when exporting your character. Now your exported character will have parameters already generated when opened with Inochi Creator.

Using Inochi Creator to Pose Your Character and Create Static Images

Are you using Mannequin Character Generator for Visual Novel development only, and not interested in VTuber livestreaming? You can still get some benefit from Inochi2D export by adjusting your character's pose further via Inochi Creator before exporting to a PNG image to use in your preferred game engine.

To do this, first make sure you have downloaded the latest version of Inochi Creator which can be obtained here. Scroll to the bottom and search for Evaluation Version to download the free version of Inochi Creator. Feel free to use it without any limitation, but if you have found Inochi Creator to be useful, we recommend buying it via itch.io to support its development!

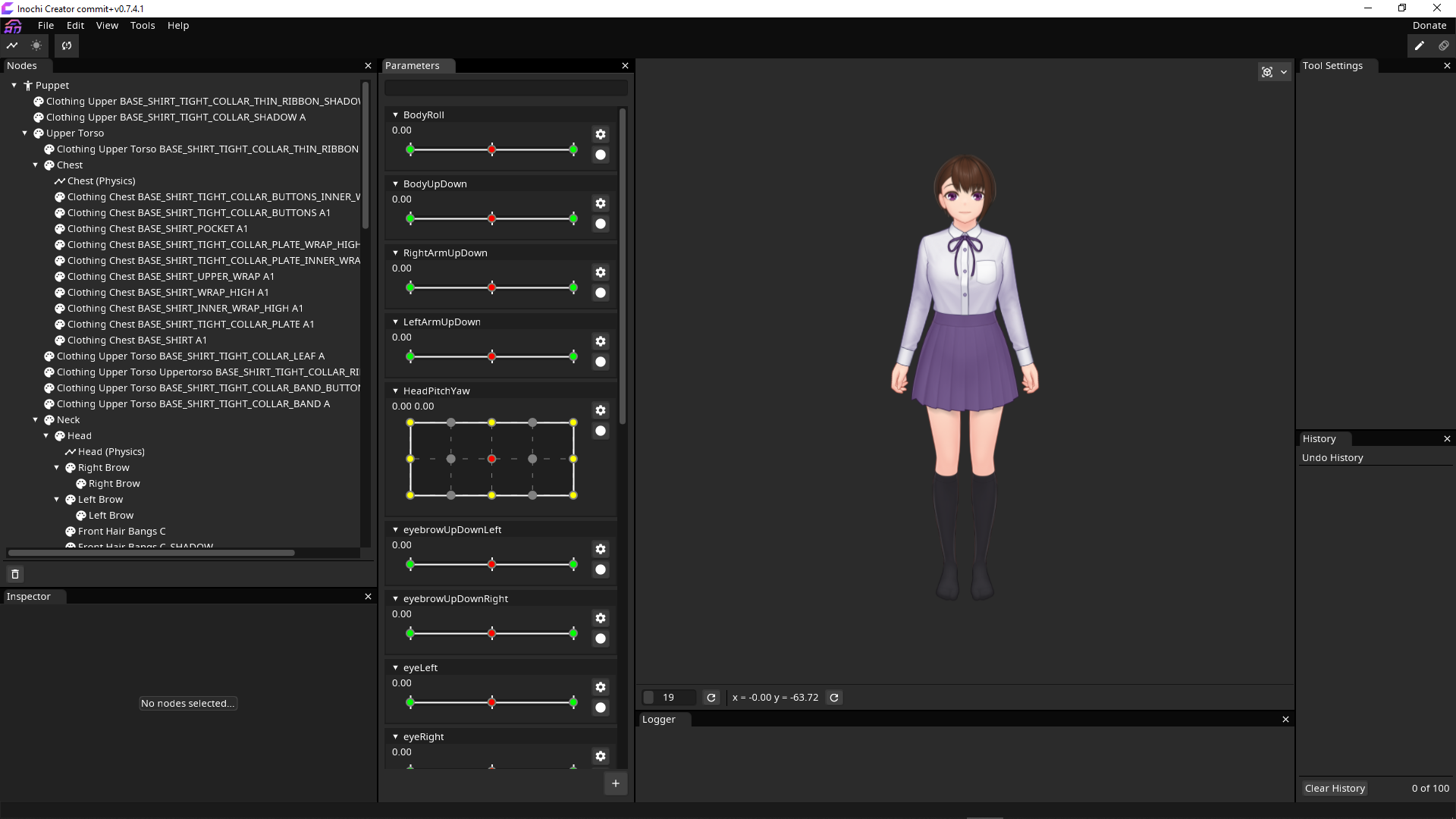

After opening your exported .inx file in Inochi Creator, you can adjust your character's pose by using the sliders available in the Parameters panel. If the panel is not there, open the View menu and click Parameters to show it.

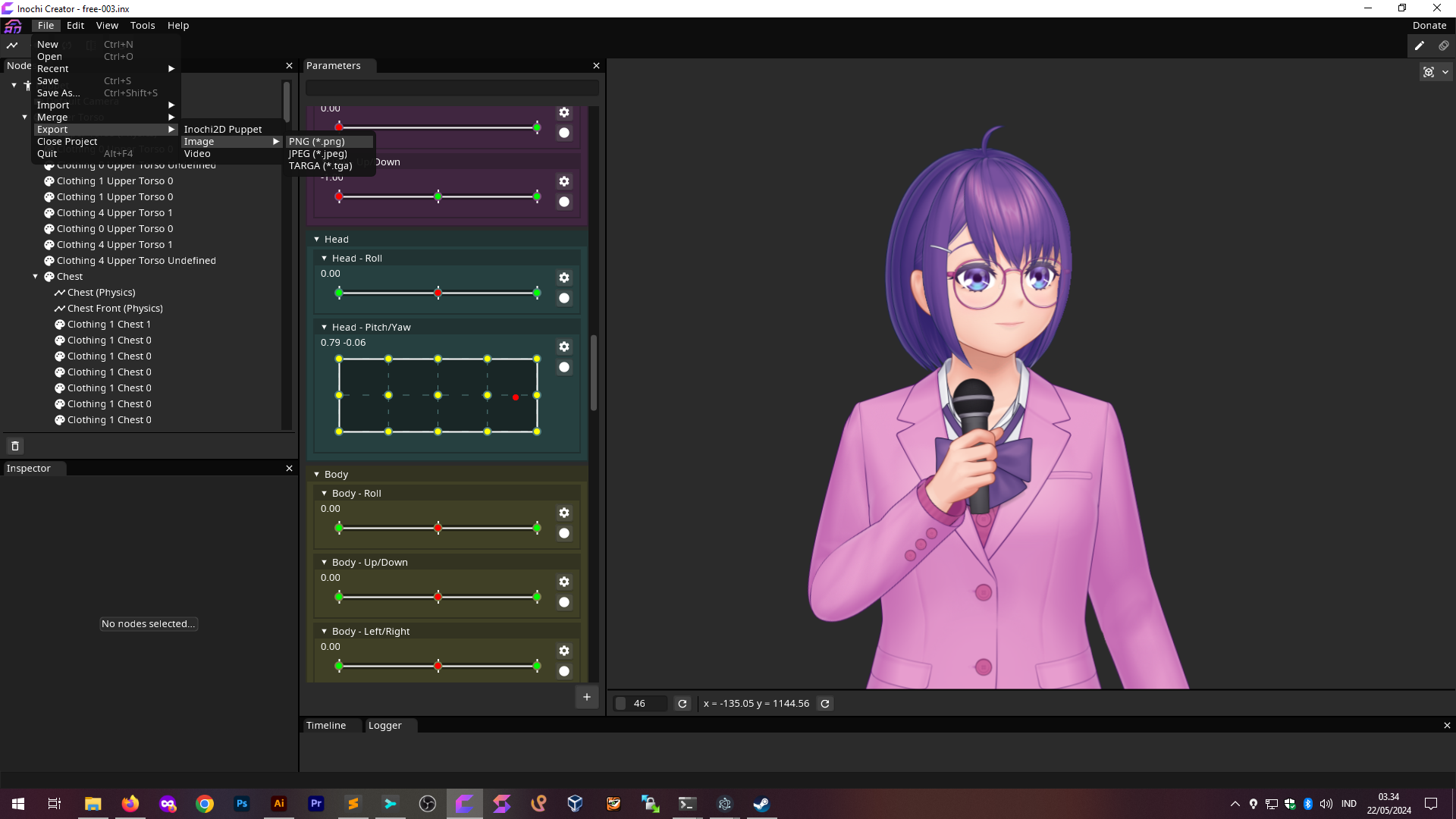

Once you're done posing your character, open the File menu and choose Export -> Image -> PNG to export your character as a PNG image. Make sure to check 'Allow Transparency' to output a PNG image with a transparent background!
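To double-check that the exported image really carries transparency before importing it into your game engine, you can inspect the PNG header. The sketch below uses only the Python standard library and reads the fixed-position IHDR fields defined by the PNG format. The function names are our own, and the check only covers PNGs with a real alpha channel (color types 4 and 6), not palette-based transparency.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_header(data):
    """Return (width, height, has_alpha_channel) from the first 26 bytes of a PNG."""
    if len(data) < 26 or data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    # IHDR layout: width (4 bytes), height (4 bytes), bit depth (1), color type (1)
    width, height, _bit_depth, color_type = struct.unpack(">IIBB", data[16:26])
    # Color types 4 (grayscale + alpha) and 6 (RGB + alpha) carry an alpha channel.
    return width, height, color_type in (4, 6)


def check_png_file(path):
    """Read just enough of the file at `path` to inspect its header."""
    with open(path, "rb") as f:
        return read_png_header(f.read(26))
```

If `check_png_file` reports `False` for the alpha flag, the image was most likely exported without 'Allow Transparency' checked.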

Using Pre-rigged Inochi2D Export for Livestreaming

In order to use your pre-rigged character for VTuber livestreaming, first you'll need:

- The latest nightly build of Inochi Session, which can be downloaded from here (this is temporary; once Inochi Session 0.5.5 is released, you can use that version instead).

- The latest version of Open Broadcaster Software (OBS) which can be downloaded from here.

- The latest version of OBS Spout2 Plugin which can be downloaded from here.

- Either one of these:

  - A webcam and the latest version of Puppetstring which can be downloaded from here.

  - A smartphone with VTube Studio installed. You can get VTube Studio for Android here, or get the iOS version here.

When exporting to .inx in Mannequin Character Generator, make sure you've chosen your preferred tracking method (webcam or smartphone) before proceeding!

Setting Up Webcam Tracking for Inochi Session using Puppetstring (Recommended New Method)

If you prefer to use smartphone tracking instead of webcam, then skip this and follow the VTube Studio guide below.

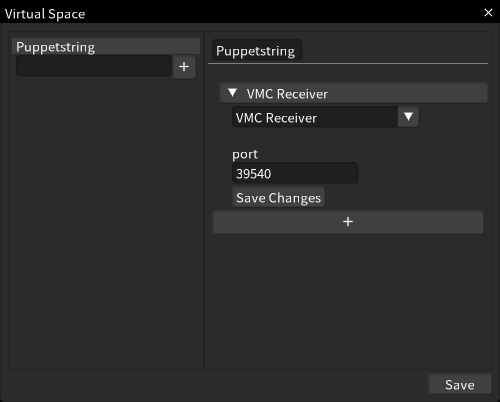

To set up Puppetstring tracking in Inochi Session, configure the virtual space by opening View -> Virtual Space. In the Virtual Space window that shows up, enter your desired virtual space name in the input field ("Default", for example). Then, click the + button to create a new virtual space.

After creating the virtual space, the next step is to add Puppetstring as a tracker. To do this, select the virtual space that you've just created, and on the right side of the Virtual Space window you will see another + button. Click this button, and choose VMC in the drop-down menu that shows up. In the Port field, enter 39540.

After that, click Save Changes and close the window.
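The VMC tracker listens for OSC messages sent over UDP. If you want to check that something can reach port 39540 on your machine, the sketch below builds two standard VMC protocol messages (`/VMC/Ext/Blend/Val` and `/VMC/Ext/Blend/Apply`) by hand using only the Python standard library. The helper names and the blend shape name `"A"` are illustrative assumptions; whether your character reacts depends on how its tracking bindings are set up, so treat this as a connectivity sketch rather than a replacement for Puppetstring.

```python
import socket
import struct


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def osc_message(address, *args):
    """Build an OSC message that supports string and float arguments only."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, str):
            tags += "s"
            payload += osc_string(arg)
        else:
            tags += "f"
            payload += struct.pack(">f", float(arg))  # big-endian float32
    return osc_string(address) + osc_string(tags) + payload


def send_test_blend(name, value, host="127.0.0.1", port=39540):
    """Send one blend shape value followed by the VMC 'apply' message."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message("/VMC/Ext/Blend/Val", name, value), (host, port))
        sock.sendto(osc_message("/VMC/Ext/Blend/Apply"), (host, port))
```

For example, `send_test_blend("A", 1.0)` sends a single test value to the local machine on the port configured above. UDP gives no delivery confirmation, so a successful call only means the packets were sent.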

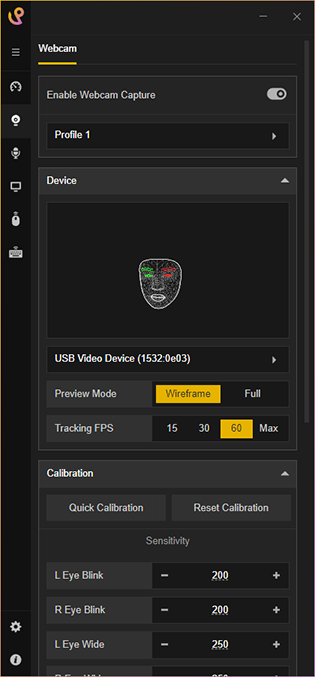

Now it's time to configure Puppetstring so it can properly track your face. To do this, open the Webcam menu in Puppetstring by clicking its icon in the sidebar. Then make sure the Enable Webcam Capture toggle is on.

You can adjust Tracking FPS from 15 to Max to get more detailed movement at the cost of higher system resource consumption. We don't recommend Max, as it's there for testing purposes only and any improvement is mostly a placebo effect.

Sometimes, your webcam placement is not ideal (too far up/down, or slightly misaligned to the left/right). This can result in your character permanently looking in a certain direction. To fix this problem, use the Quick Calibration feature by clicking the button and keeping your head in your intended 'Neutral' position for 10 seconds.

Setting Up Webcam Tracking for Inochi Session using OpenSeeFace (Old Method, Not Recommended)

We have developed a more user-friendly face tracker app for Inochi Session: Puppetstring VTuber Tracking. OpenSeeFace is no longer recommended for webcam tracking. If you prefer to use smartphone tracking instead of webcam, then skip this and follow the VTube Studio guide below.

Before continuing, make sure you have downloaded OpenSeeFace, which can be found here.

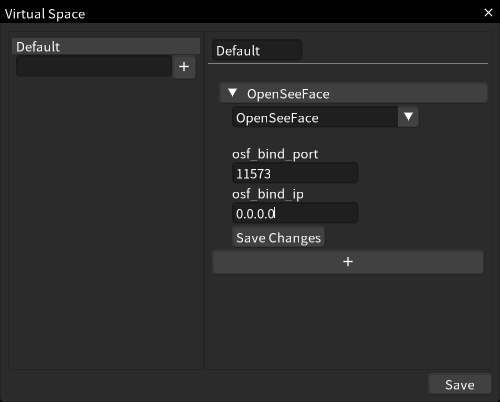

To set up OpenSeeFace tracking in Inochi Session, configure the virtual space by opening View -> Virtual Space. In the Virtual Space window that shows up, enter your desired virtual space name in the input field ("Default", for example). Then, click the + button to create a new virtual space.

After creating the virtual space, the next step is to add OpenSeeFace as a tracker. To do this, select the virtual space that you've just created, and on the right side of the Virtual Space window you will see another + button. Click this button, and choose OpenSeeFace in the drop-down menu that shows up.

- For `osf_bind_port`, enter `11573`

- For `osf_bind_ip`, enter `0.0.0.0`

After that, click Save Changes and close the window.

Now it's time to run OpenSeeFace so it can start tracking your movement via the webcam. To do this, first extract the ZIP file that you got from the GitHub link above. Open the extracted folder, then open the Binary subfolder. Inside, you'll find facetracker.exe and run.bat. If you want to do some setup first (choosing the framerate or resolution, or picking a camera if you have multiple webcams connected to your system), open run.bat. Otherwise, you can open facetracker.exe for instant use. For Linux users, you can follow this guide.
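If your character does not move, it can help to confirm that OpenSeeFace is actually sending tracking data. OpenSeeFace sends UDP packets, by default to port 11573 on the local machine. The sketch below is a small diagnostic listener using the Python standard library; the function names are our own. Only one program can bind the port at a time, so close Inochi Session before running it on port 11573.

```python
import socket


def open_listener(ip="127.0.0.1", port=11573):
    """Bind a UDP socket on the address OpenSeeFace is expected to send to."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((ip, port))
    return sock


def wait_for_packet(sock, timeout=5.0):
    """Return the size in bytes of the first packet received, or None on timeout."""
    sock.settimeout(timeout)
    try:
        data, _sender = sock.recvfrom(65535)
    except socket.timeout:
        return None
    return len(data)
```

With facetracker.exe running, `wait_for_packet(open_listener())` returning a number means tracking data is arriving. A result of `None` suggests that the tracker is not running, cannot see a face, or is sending to a different port.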

Setting Up Smartphone Tracking for Inochi Session using VTube Studio

If you prefer to use webcam tracking instead of a smartphone, then follow the Puppetstring guide above (or the older OpenSeeFace guide) and skip this.

To set up VTube Studio tracking in Inochi Session, configure the virtual space by opening View -> Virtual Space. In the Virtual Space window that shows up, enter your desired virtual space name in the input field ("Default", for example). Then, click the + button to create a new virtual space.

After creating the virtual space, the next step is to add VTube Studio as a tracker. To do this, select the virtual space that you've just created, and on the right side of the Virtual Space window you will see another + button. Click this button, and choose VTube Studio in the drop-down menu that shows up.

Open VTube Studio on your smartphone, and tap the gear icon. Scroll to the bottom to find the 3rd Party PC Clients section. Toggle Activate on, and tap the Show IP List button. Enter the IP address shown into the phoneIP field in Inochi Session. For pollingFactor, enter 1. Make sure both your computer and your smartphone are connected to the same local network (LAN/WiFi).

After that, click Save Changes and close the window.
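If tracking does not start, a common cause is that the phone and the computer are on different networks (for example, a guest WiFi). As a quick sanity check, the sketch below compares two IPv4 addresses using Python's standard `ipaddress` module. The function name is our own, and the default prefix length of 24 (a 255.255.255.0 subnet mask) is an assumption that fits most home routers but may not match your network.

```python
import ipaddress


def same_network(pc_ip, phone_ip, prefix_length=24):
    """Return True if both IPv4 addresses fall inside the same subnet."""
    # strict=False lets us pass a host address instead of a network address
    network = ipaddress.ip_network(f"{pc_ip}/{prefix_length}", strict=False)
    return ipaddress.ip_address(phone_ip) in network
```

For instance, `same_network("192.168.1.10", "192.168.1.42")` is true, while a phone at `192.168.2.42` would be on a different /24 network and likely unreachable from Inochi Session.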

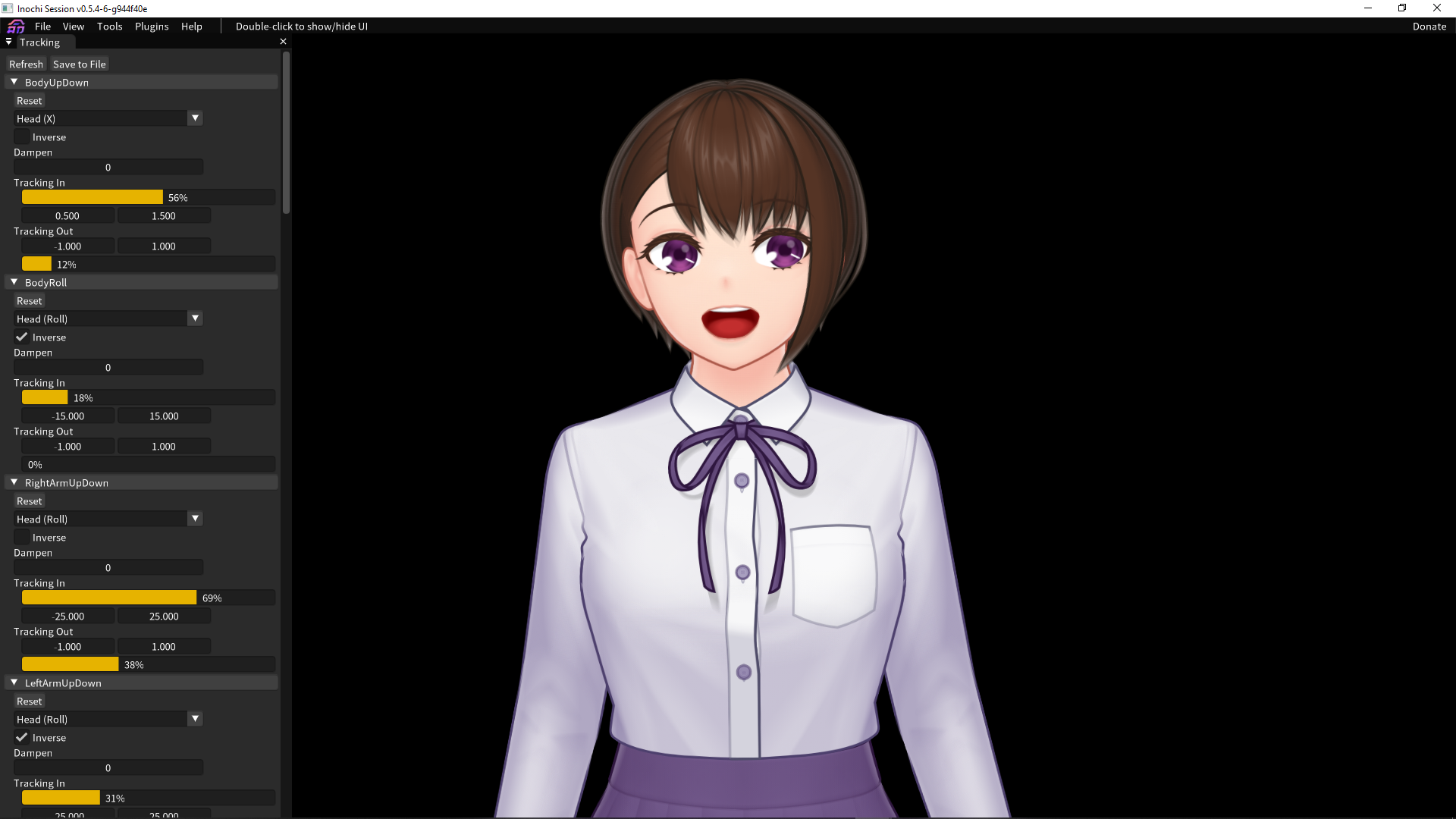

Loading Your Character to Inochi Session

To load your previously exported character into Inochi Session, just drag and drop the corresponding .inx file from your file manager/explorer onto the Inochi Session window. If your face tracker has already been set up by following the steps above, your character should immediately move according to the face tracker.

To reposition your character, just click and drag across the Inochi Session window. To zoom in/out, click and hold your character, and use mouse scroll.

If you are experiencing a trailing-image glitch in Inochi Session, turning off Post Processing might help.

Sending Video Data from Inochi Session to OBS

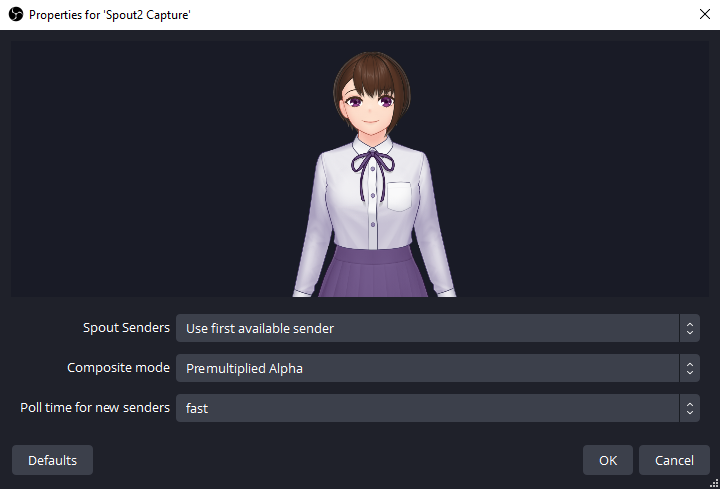

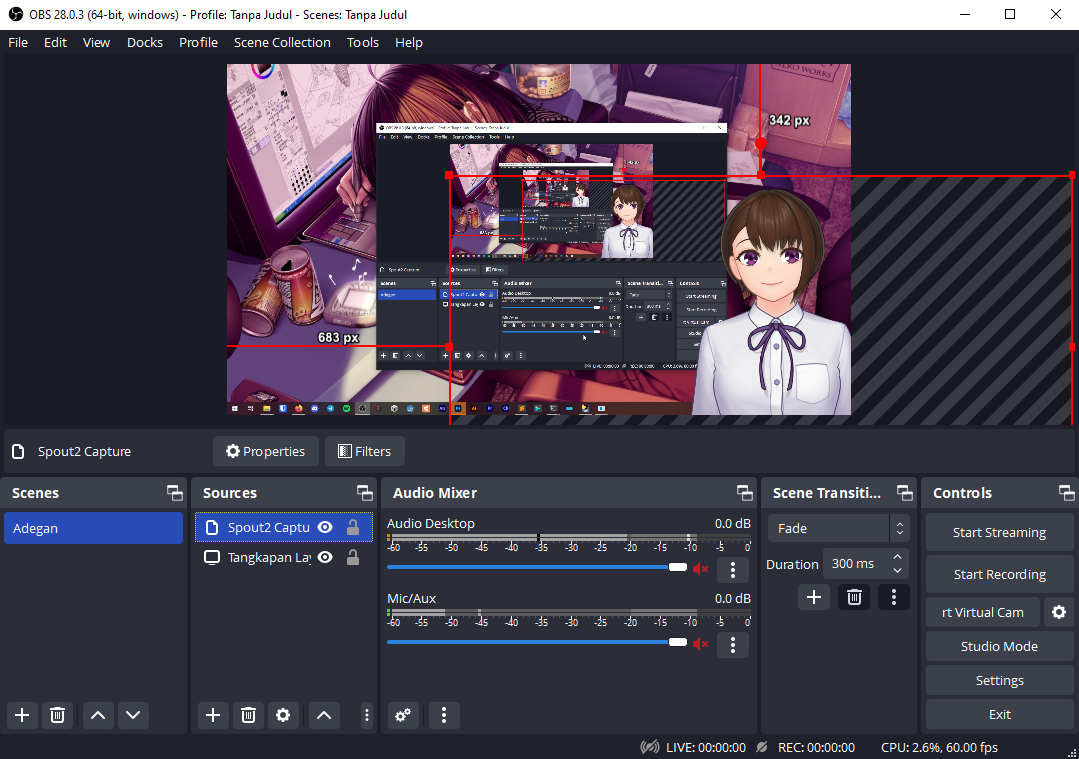

Now that your character is moving in Inochi Session according to the face tracker, the next step is displaying the output in OBS. First, make sure you have already installed the OBS Spout2 Plugin from the GitHub link above. Then, add a new source in OBS and choose Spout2 Capture.

In the Properties window that shows up, use these values in the drop-down menu fields:

- For `Spout Senders`, choose `Inochi Session` (or if you're not running another app which also uses Spout2, you can choose `Use first available sender`)

- For `Composite Mode`, choose `Premultiplied Alpha`

After that, click OK. Your character will now appear in the preview, with transparent background, ready to be composited to your stream!
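The Composite Mode setting matters because premultiplied and straight alpha blend differently. The sketch below shows the arithmetic for a single pixel, assuming (as the recommended setting suggests) that the frames arriving from Inochi Session already have their color multiplied by alpha. The function names are our own, and color values are floats between 0.0 and 1.0.

```python
def over_premultiplied(src_rgb, src_alpha, dst_rgb):
    """Blend a premultiplied source pixel over an opaque background pixel."""
    # The source color is already scaled by alpha, so it is added as-is.
    return tuple(s + d * (1.0 - src_alpha) for s, d in zip(src_rgb, dst_rgb))


def over_straight(src_rgb, src_alpha, dst_rgb):
    """Blend a straight (non-premultiplied) source pixel over a background pixel."""
    return tuple(s * src_alpha + d * (1.0 - src_alpha) for s, d in zip(src_rgb, dst_rgb))


# A half-transparent pure red pixel, stored premultiplied: (1.0 * 0.5, 0, 0)
premultiplied_red = (0.5, 0.0, 0.0)
blue_background = (0.0, 0.0, 1.0)

correct = over_premultiplied(premultiplied_red, 0.5, blue_background)
too_dark = over_straight(premultiplied_red, 0.5, blue_background)
```

Here `correct` is `(0.5, 0.0, 0.5)`, while `too_dark` is `(0.25, 0.0, 0.5)`: treating premultiplied data as straight alpha applies the alpha twice, which tends to show up as dark fringes around semi-transparent edges such as hair.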

Additional Tips

In order to improve performance in Inochi Session, consider doing these:

- Disable post processing effects by opening the Scene Settings panel (`View -> Scene Settings`) and unchecking `Post Processing`.

- Resize the Inochi Session window to a smaller size.

- Use an `.inp` file instead of the `.inx` file generated by Mannequin Character Generator. To generate an `.inp` file from your existing `.inx` file, open the corresponding file in Inochi Creator, and then open `File -> Export -> Inochi2D Puppet`. In the `Export Options` window that shows up, click the `Resolution` drop-down menu and choose `4096x4096` for optimal results.

If you want to customize Mannequin Character Generator's exported .inx file further using Inochi Creator, you can learn more about how to use it here.